Exe0.2 Eric Snodgrass

//a direct continuation, in draft form, of earlier text for *.exe (ver0.1) gathering in preparation and for discussion at *.exe (ver0.2) meetup

sites of execution: interstice

Execution, in its computational sense, might be described as the carrying out of a set of operative instructions by a machine that in a mode of execution is able to run without any required interventions into the nature of its decidability. From such a perspective, execution is any ongoing event of automation, however minute or extensive this period of execution time (or “runtime”) might be. Automation in this sense is an ongoing process of executability, in which instructions as well as inputs and outputs can be continuously decided and acted upon. Crucially, execution is not merely the computability of something but the actual practice and automated execution of this computability in the world. Each instance of execution brings the wound up velocities of its logical abstractions into contact with the frictional and situated materials of its actualised encounters.

This points to the pincer-like quality of execution hinted at earlier. The way in which it brings computability and materials for its executed computation into direct and active interaction with one another, creating in the process what Howse identifies as “sites of execution” via the kinds of “agential cuts” that Barad elucidates. In the case of automated execution, the agential cuts involved will include the particular contours of logic and computability as they have been compiled into the executing machine code and its related moving physical parts. And in respect to a site of execution, one might draw from von Uexküll’s Umwelt to characterise sites as the material points of interaction (or even piercing collisions) of these agential cuts as they inscribe and articulate their perceptual-effectual qualities in the already active ecologies of execution within which any particular executions take place.

As Barad and Howse’s work is quick to point out, the very notion of isolating certain kinds of “sites” of execution is readily problematiseable. In attempting to temporarily isolate such sites of execution as well as a definition of execution itself, one aim here is to interrogate the notion of execution by moving from levels of abstraction and into their situated forms and enactments. Thus the examples of the tick or Howse’s work are highlighted because of the way in which they foreground particular sites of material execution, with the focus in these initial examples given to a sense of marks on bodies, and the fairly precisely defined points of piercing execution in relation to a body.

But execution in the world is a knotty, complex thing, and there are further productive ways in which one might consider the notion of a site of execution. Foucault’s employment of the concept of “interstice” is one such helpful resource. The term appears in Foucault’s essay and outline of what constitutes a genealogical approach, “Nietzsche, Genealogy, History” (originally published in 1971). For Foucault (at this juncture in his thinking), genealogy should treat the history of any emergent interpretations as a continued interplay and contestation of processes of "descent," "emergence" and "domination," as they alight from one act of self-recognition and interpretation to another, via one body to another and one form of dominance and resultant struggle to the next. In speaking of emergence, Foucault describes how any study into the emergence of discursive processes should not be that of a speculation on origins, but rather a study of the “interstices” of existing (often confrontational) forces and how these interstices can be seen to provide traction or take on certain generative qualities. Interstitials of emergence are themselves formed from both existing energies of descent (the existing forces that have fed into sites of emergence) and domination (the ability of any existent or emergent discursive force to harden into stabilised, recognisable and thus enforceable forms of expression). Foucault (1984, p.84-5), drawing directly on Friedrich Nietzsche’s genealogical method, describes a site of emergence as a “scene” where competing forces and energies “are displayed superimposed or face-to-face.” The interstice in this instance is a site of confrontation of such forces; it is “nothing but the space that divides them, the void through which they exchange their threatening gestures and speeches. . . it is a ‘non-place,’ a pure distance, which indicates that the adversaries do not belong to a common space.” In this formulation of Foucault’s then, an interstice is a scene, a superimposition and a confrontational void through which potentially competing forms of gestures and energies can be exchanged. The emphasis in this conceptual abstraction is not so much on the materiality of an actual site, but it nevertheless points to a sense of a key intersectional point of competing forces, for which the metaphor of an interstice provides the overarching rubric. Foucault makes reference in this same passage to Nietzsche’s term Entstehungsherd, which it is worth noting is typically translated as “site of emergence” [footnote 3].

Of particular interest in contrasting this notion of interstice with that of the direct shifting and launching of computational processes into the skin of a user or the soil of the earth in the works of Howse, is the way in which Foucault’s characterisation, while containing a similar notion of confrontation and interaction, nevertheless is characterised as a superimposition involving a “pure distance.” In Foucault’s site of the interstice then, the emphasis is shifted from direct contact to this distance between competing forces and the way in which it can create a drive of its own that can result in a highly productive, generative form of interplay. To couch it in the terms of this essay, the focus in any such encounters in a site of execution then becomes just as much on the uncomputable as it is on the computable. And the interstice can also be seen to be the emergent site and breeding ground of a will to close this very gap.

As a simple example, consider the originary interstitial gap that Turing’s (1936) machine model opens up with its mandate of discrete, symbolic elements capable of being enumerated and made into effectively calculable algorithms for execution upon and by machines. In the further materialization of Turing’s thesis into actual computing machines, the act of making things discrete, so as to be computable, becomes one of establishing machine-readable cuts. Specifically, these cuts are the switchable on and off state elements, or flip-flops that are executed via logic gates used to store and control data flow. Such flippable states constitute the material basis that allows for the writing and running of the executable binary instructions of machine code upon a computing machine. Although any manifestation of a Turing machine necessarily involves the storage and transcription of such symbols onto a (comparatively) messy flux of what (in contrasting relation) are described as “analogue” materials, this marked independence of symbol from substrate (an independence strong enough for Turing to anoint it as “universal”), their outright “indifference” (Schrimshaw 2012; Whitelaw 2013) and yet simultaneous reliance upon each other for functioning purposes, is a kind of “pure”, interstitial distance that Foucault’s formulation can be seen to point at. A key emergent “medial appetite” instigated here being the ongoing way in which various extensive and intensive qualities of the digital and analogue (the discrete and the continuous) are always in some respect “out of kilter” (Fuller 2005, p.82) with one another [footnote 4]. Out of such interstitials... the emergence of things such as the seemingly pathological drive to have the digital replicate the analogue and overcome the “lossy” gaps in such encounters; the constant pushing towards faster speeds, higher resolutions, “cleaner” signals, “smarter” machines and so on.
Such interstitial sparks are often readily evident in even just the collision course nature of the names we give to these endeavours: “Internet” “of” “Things”; “Artificial” “Intelligence”. A particularly productive agential cut, this incision of the digital and its seismic materialisation in computational form, giving birth as it has to the “manic cutter known as the computer” (Kittler 2010, p.228). One might even characterise such a method of divide and conquer as essential to most discursive systems, the method in question working to make entities executable according to their particular logics and delimited needs.
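To make the notion of a switchable, storable "cut" a little more concrete, the following is a minimal sketch of one such flip-flop element: a set-reset latch modelled as two cross-coupled NOR gates. The names `nor` and `SRLatch` are illustrative assumptions rather than anything drawn from the texts discussed here, and the model is purely logical, deliberately ignoring the voltages, timings and noise of the analogue substrate on which any actual latch relies.

```python
def nor(a: int, b: int) -> int:
    """NOR gate: outputs 1 only when both inputs are 0."""
    return int(not (a or b))


class SRLatch:
    """A set-reset latch built from two cross-coupled NOR gates (idealised)."""

    def __init__(self) -> None:
        # Start in the "reset" state: Q = 0 and its complement = 1.
        self.q = 0
        self.q_bar = 1

    def step(self, s: int, r: int) -> int:
        """Apply the set (s) and reset (r) inputs and return the stored bit Q."""
        if s and r:
            # Setting and resetting at once is the latch's forbidden input.
            raise ValueError("s and r must not both be 1")
        # Feed each gate's output back into the other until the state settles.
        for _ in range(4):
            q = nor(r, self.q_bar)
            q_bar = nor(s, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break
            self.q, self.q_bar = q, q_bar
        return self.q


latch = SRLatch()
print(latch.step(1, 0))  # set the bit
print(latch.step(0, 0))  # no input: the bit is held
print(latch.step(0, 1))  # reset the bit
```

What the sketch leaves out is arguably the point of the passage above: the discrete 0 and 1 appear here as given, whereas in any material machine they have to be continually cut out of a continuous flux.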

In relation to execution and its capacities of automation, the productive interstitial gap involved instigates a potential drive towards making more things executable according to the logic of the executing process in question, of enclosing more entities and procedures into its discursive powers. This is a will towards what Foucault names "domination," the way in which sites of emergence can be understood to have the ability to harden into dominant forms of understanding and action. Domination is the consolidating of an emergent will, a species-like hardening of a system of interpretation and a bending of other wills and forces to its own compositional rules of interpretation. An engraving of power into the bodies it would make accountable to it. Such a self-amplifying will to power often gains further traction by convincing all parties involved of its own normative necessity and inevitability, pushing to the side any notions of the contingent accumulation of its situation and reconfigurability of its aggregate parts. In its peak stage, domination is when the executability of an emergent will (computational, cultural, political, etc.) is able to attain a level of everyday, almost automatic execution. Thus the norm critique of Foucault points towards an understanding of execution and executability as a productive ability by a process to make dominant and sustainable its particular discursive practices and requirements in a world full of many other potential processes.

Here one can turn to the discussions and examples that Wendy Chun (2016) suggests might be seen as paradigmatic of such computational habituations, such as her recent characterisations of “habitual new media” and the way in which such media have a notable quality of driving users into a cycle of “updating to remain the same.” For instance, the current habit of a continually shifting and often powerfully political set of terms of service that are rolled out by many of the currently dominant networked computing platforms to their accepting users. As Chun and others (e.g. Cayley 2013) point out, every such instance involves a certain abnegation of decision and responsibility. Nevertheless, the computational codes that underwrite more and more of contemporary cultural and political activities of the moment have a particular way of selling themselves as the best means with which to guarantee their subjects a sustainable living in the digital era (whether what is being sold is a data mining process for being kept safe from terrorism or a particular social feature to be implemented in the name of a better collective experience). Again, a pincer-like setup can be seen to be in play in these impositions, whereby the tools in question are “increasingly privileged. . . because they seem to enforce automatically what they prescribe” (Chun 2011, p.91-2). Chun goes on to point out how such moves can be seen as a promotion of code as logos, “code as source, code as conflated with, and substituting for, action… [producing] (the illusion of) mythical and mystical sovereign subjects who weld together norm with reality, word with action.” (Chun 2011, p.92).
As can be seen to be enacted in many of the ongoing crisis-oriented forms of governance today, in which a state of emergency of one kind or another is almost implied as the natural state of things, the application of computational modes of execution into such situations can be viewed as almost a perfection of the potential violence of the polis, a cutting out of the executive so as to become the executor (Chun 2011, p.101). Or as Geoff Cox (2012, p.100.1) describes it, “code exceeds natural language through its protocological address to humans and machines. It says something and does something at the same time—it symbolizes and enacts the violence on the thing: moreover, it executes it.” The shared and persuasive (or even conditional) chorus in this ensemble of human and nonhuman agents summed up in a Gilbert & Sullivan lyric: “Defer, Defer, to the Lord High Executioner!”

Such a pointing towards the ways in which automation involves the acceptance and thus removal of a moment of decision, also points to how the initialising of the decision engine of an executable computer program is both the initiating of a decision making process but also a termination, in that the moment a file is run decision making processes at the level of the executing code in question are thus set. Executing decisions become predefined, as do the parameters of the computable inputs and outputs. This is not to bemoan such a situation, but rather to consider this potent quality of computation as it is put into sites of encounter with the discursive entities that are brought within its range. Programmed habituation has its own particular qualities, some of which have to date been to purposefully mobilise forms of automation and their execution. Programmed execution’s most powerful effect is not only its deferral of responsibility but the expansion of power that can occur once such a deferral is normalised. Consider the qualities of deferral and sliding expansion that layers of code and their corresponding modes of abstraction promote in a simple example like the following:

- Execute that individual with this weapon.

- Execute this will according to this set of laws.

- Execute this group of individuals in accordance with this set of laws.

- Execute this program that executes this group of individuals in accordance with this set of laws.

sites of execution: invisible hand

shift.

“The true moment of shadow is the moment in which you see the point of light in the sky. The single point, and the shadow that has just gathered you in its sweep.”

(Thomas Pynchon, Gravity’s Rainbow, cited in Kittler 2010, p.229-230)

In histories of computing, the punched cards of Joseph Marie Jacquard’s looms and Charles Babbage and Ada Lovelace’s implementation of a punched card model of programming for Babbage’s Analytical Engine make for popular launching points for discussions on the epistemic and material breakthroughs that led to the general purpose computers of the present. As writers such as Sadie Plant (1995) and Nicholas Mirzoeff (2016) have highlighted, in the midst of his transition from his work on the Difference Engine to that of the Analytical Engine, Babbage undertook an extended study of the workings and effects of automated machines used in various forms of manufacture, published in 1832 as On the Economy of Machinery and Manufactures. The text features extended explanations of the mechanical operations of such machines in the factories of the time and their accompanying forms of specialization, standardisation and systemisation, as reflected both within the factories and also in the economies emerging out of these mechanised factories as a whole. The influence of Adam Smith and nineteenth-century liberalism is particularly evident, with Babbage emphasising the benefits that might be achieved with greater division of labour: “the most important principle on which the economy of a manufacture depends on is the division of labour” (Babbage, cited in Mirzoeff p.4). As witnessed in one passage describing Gaspard de Prony’s work with turning unemployed servants and wig dressers into “computers” capable of calculating trigonometric tables by means of addition and subtraction, the idea can be seen to take hold on Babbage that such factories and carefully arranged forms of labour and its division might be understood as schematics and material setups for entirely mechanical forms of computation.
Babbage would later look back on these early factories as prototypes for his “thinking machines,” with his naming of the “mill” as the central processing unit for his Analytical Engine figuring as the most obvious token of this influence on his computational and material thinking.

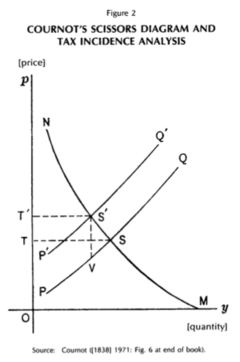

Smith and the rising stars of liberal economic thought in Babbage's time were notable for their formulations of economic rationality and self interest, devising models for interpreting human economic behaviour. Chief among these new models was that of geometrically plotted supply and demand curves that acted as mechanisms for determining ideal price points (the sites for execution) in relation to these variables. Armed with such powerful algorithmic methods for rational determinations of maximal economic “efficiency,” liberal economics as discipline, instrument and ideology takes off. As the example of Babbage indicates, and as examples such as Mirzoeff’s (“Control, Compute, Execute: Debt and New Media, The First Two Centuries”) recent reading of debt’s key role in new media’s origins and contemporary practices highlight, economics acts as a key and notably concurrent point of reference for computational thinking, its origins and practices (consider, for instance, John von Neumann’s contributions to both fields, or the economic substrate of venture capital money that sprouts the many silicon valleys of today's computational landscape).
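The sense in which such curves operate as a mechanism for executing a price can be sketched in a few lines. The example below assumes the simplest textbook case of straight-line supply and demand; the function name `equilibrium` and all coefficients are hypothetical and chosen only for illustration, not taken from Smith, Babbage or Mirzoeff.

```python
def equilibrium(a: float, b: float, c: float, d: float) -> tuple[float, float]:
    """Return the (price, quantity) at which linear supply and demand meet.

    Assumed illustrative model:
        demand: q = a - b * p   (quantity demanded falls as price rises)
        supply: q = c + d * p   (quantity supplied rises as price rises)
    Setting the two equal and solving gives p = (a - c) / (b + d).
    """
    if b + d == 0:
        raise ValueError("the curves are parallel and never intersect")
    price = (a - c) / (b + d)
    quantity = a - b * price
    return price, quantity


# Hypothetical coefficients, for illustration only.
price, quantity = equilibrium(a=100, b=2, c=10, d=1)
print(price, quantity)  # 30.0 40.0
```

Once the coefficients are fixed, the "ideal" price point follows automatically. Everything contestable about the situation sits in the choice of model and inputs, which the calculation itself does not show.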

For the purposes of this inquiry, it is Smith’s notion of “the invisible hand” that is of interest. Smith (ref) famously writes in The Wealth of Nations of the invisible hand as follows: “by directing that industry in such a manner as its produce may be of the greatest value, he intends only his own gain, and he is in this, as in many other cases, led by an invisible hand to promote an end which was no part of his intention.” As Foucault (2008) makes clear in his analysis of the origins of various forms of liberal economic thought (particularly those of “ordoliberal” and “neoliberal” forms), this is a blackboxing of economic responsibility and decision making, pronouncing economic causes as both autonomous and unknowable, and thus unregulatable/ungovernable. Furthermore, such an invisible instruction pointer is a handy processing device for paving the way for a kind of automation of the economy in the service of this liberated invisible hand. As in Chun’s examples, it is the blackboxing, the making invisible of the source in the name of a kind of “sorcery” that is key here. Foucault (2008, p.279-80):

Here we are at the heart of a principle of invisibility. In other words, what is usually stressed in Smith’s famous theory of the invisible hand is, if you like, the “hand,” that is to say, the existence of something like providence which would tie together all the dispersed threads. But I think the other element, invisibility, is at least as important. Invisibility is not just a fact arising from the imperfect nature of human intelligence which prevents people from realizing that there is a hand behind them which arranges or connects everything that each individual does on their own account. Invisibility is absolutely indispensable. It is an invisibility which means that no economic agent should or can pursue the collective good.

A conveniently colour-blind, driverless system in which one can happily push to the side the thorny ethical issues of systemic bias, discrimination and the many age old and ongoing feedback loops of the seemingly tightly interwoven cause-effects that arise out of the blackboxed interstitials of such issues.

Another compelling example of shifting the site of execution, one that queries execution as it transpires across economics and its computation, can be found in the work Invisible Airs by the artist duo YoHa (Graham Harwood and Matsuko Yokokoji), which features a series of “contraptions” [footnote 5] aimed at performing the largely opaque contours of Bristol City Council’s expenditures as laid out in its newly minted open data initiatives. Acknowledging the ongoing historical and social formations of gaps between knowledge and power, in this case the “gap between the wider public's perception of data” (as well as a contemporary “form of indifference toward the expectations of this kind of open data initiative”), the pieces in this project aim to create “A partial remedy for this indifference” through “making data more vital” while also “taking a more critical view of transparency itself” (Harwood 2015, p.93).

As with much of YoHa’s work, the project resulted in several outputs, with a core interrogation of the dataset being that of expenditures of over £500...... ((another 1500 or so words, mostly on Invisible Airs, words hopefully soon to be put in place in advance discussion at Malmö event))

sites of execution: interface

notes

[3]: Entstehungsherd has also been translated as “breeding ground”, hinting both towards the medical sense of Herd as the “seat” or “focus” of a disease and also to its further designation as a site of any biological activity that makes something emerge (Emden 2014, p.138). This is part of a general transition in Nietzsche’s thinking, one that shows up most clearly in his Genealogy of Morals, whereby he aims to usurp notions around *Ursprung* (origin) with that of Entstehungsherd. Foucault’s description of the interstice can itself be further compared with his well known formulation of the apparatus (dispositif) and its “grids of intelligibility,” in which systemic connections between heterogeneous ensembles of elements are made productive. See Matteo Pasquinelli’s (2015) helpful commentary, “What an Apparatus is Not: On the Archeology of the Norm in Foucault, Canguilhem, and Goldstein,” for a genealogy of Foucault’s employment of dispositif, one that like von Uexküll’s Umwelt sees a tracing back of continental philosophy to an earlier breeding ground of biophilosophical ruminations.

[4]: A little note on the potential quantum bits, some Kittler, a move from dialectics to trialectics (Jorn)?!...

[5]: Harwood and YoHa descriptions of contraptions...

references

Cayley, John. “Terms of Reference & Vectoralist Transgressions: Situating Certain Literary Transactions over Networked Services.” Amodern 2: Network Archaeology. 2013. Web. http://amodern.net/article/terms-of-reference-vectoralist-transgressions/

Chun, Wendy Hui Kyong. “Crisis, Crisis, Crisis, or Sovereignty and Networks”. Theory, Culture & Society, Vol. 28(6):91-112, Singapore: Sage, 2011. Print.

Chun, Wendy Hui Kyong. Updating to Remain the Same: Habitual New Media. Cambridge, Massachusetts: The MIT Press, 2016. Print.

Cox, Geoff. Speaking Code: Coding as Aesthetic and Political Expression. Cambridge, Massachusetts: The MIT Press, 2012.

Cox, Geoff. “Critique of Software Violence.” Concreta 05, Spring 2015. Web. http://editorialconcreta.org/Critique-of-Software-Violence-212

Emden, Christian J. Nietzsche's Naturalism: Philosophy and the Life Sciences in the Nineteenth Century. Cambridge University Press, 2014. Print.

Foucault, Michel. The Foucault Reader. Ed. Paul Rabinow. New York: Pantheon, 1984. Print.

Foucault, Michel. The Birth of Biopolitics: Lectures at the Collège de France 1978–1979. Trans. Graham Burchell. New York: Palgrave Macmillan, 2008.

Fuller, Matthew. Media Ecologies: Materialist Energies in Art and Technoculture. Cambridge, Massachusetts: The MIT Press, 2005. Print.

Harwood, Graham. Database Machinery as Cultural Object, Art as Enquiry. Doctoral dissertation. Sunderland: University of Sunderland, 2015. Print.

Howse, Martin. “Dark Interpreter – Provide by Arts for the hardnesse of Nature.” Occulto Magazine, Issue δ, 2015.

Kittler, Friedrich. Optical Media: Berlin Lectures 1999. Tr. Anthony Enns. Cambridge, England: Polity Press, 2010.

Mirzoeff, Nicholas. “Control, Compute, Execute: Debt and New Media, The First Two Centuries.” After Occupy. Web. http://www.nicholasmirzoeff.com/2014/wp-content/uploads/2015/11/Control-Compute-Execute_Mirzoeff.pdf

Pasquinelli, Matteo. "What an Apparatus is Not: On the Archeology of the Norm in Foucault, Canguilhem, and Goldstein." Parrhesia, n. 22, May 2015, pp. 79-89. Web. http://www.parrhesiajournal.org/parrhesia22/parrhesia22_pasquinelli.pdf

Plant, Sadie. “The Future Looms: Weaving Women and Cybernetics.” In Mike Featherstone and Roger Burrows (eds.), Cyberspace/Cyberbodies/Cyberpunk: Cultures of Technological Embodiment. London: Sage Publications, 1995.

Schrimshaw, William Christopher. “Undermining Media.” artnodes, No.12: Materiality, 2012. Web. http://artnodes.uoc.edu/index.php/artnodes/article/view/n12-schrimshaw

Turing, Alan M. “On Computable Numbers, with an application to the Entscheidungsproblem.” Proceedings of the London Mathematical Society, Second Series, V. 42, 1936, p. 249. Print.

Whitelaw, Mitchell. “Sheer Hardware: Material Computing in the Work of Martin Howse and Ralf Baecker.” Scan: Journal of Media Arts Culture, Vol.10 No.2, 2013. Web. http://scan.net.au/scn/journal/vol10number2/Mitchell-Whitelaw.html